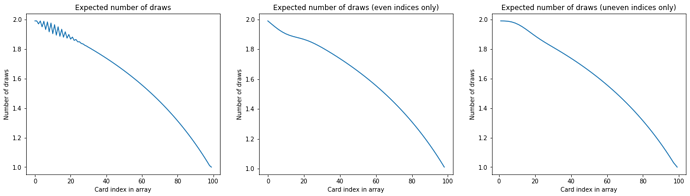

Let’s try to explain the linear relationship @dreev saw for a large number of cards. We will not be too rigorous.

Let T{\left(c, u\right)} be the expected number of turns remaining, given that there are c cards remaining of which we know u. Consider the aforementioned recurrence relation for the expectation of the number of turns remaining

\begin{align}T{\left(c,u\right)} &= 1 + \frac{u}{c-u} T(c-2, u-1) + \left(1 - \frac{u}{c-u}\right) \frac{1}{c-u-1}T(c-2, u) \\&+ \left(1 - \frac{u}{c-u}\right) \frac{u}{c-u-1}\left(1+T(c-2, u) \right) \\&+ \left(1 - \frac{u}{c-u}\right) \left( 1 - \frac{u+1}{c-u-1}\right)T(c, u+2) \end{align}

We seek an approximate scaling solution T{\left(c, u\right)} \approx c \tau{\left(u/c\right)} \equiv c \tau{\left(f\right)}. Here now \tau is a continuous function of a real-valued argument. Our solution will only be valid in the limit c\to\infty at fixed f.

The idea is to expand both sides of the equation in powers of 1/c and solve it at the lowest nontrivial order. The terms at different values of c, u on the right-hand side, in this continuum limit, are approximated as derivatives.

So we just put the right hand side of the recurrence relation in mathematica, in terms of our scaling function \tau, and ask it to expand everything in powers of 1/c.

The lowest order term is just c \tau{(f)} = c \tau{(f)}, which is automatically satisfied.

The first nontrivial order is order one (c^0), which gives the differential equation

1 - f - f^2 +2 f ( 3 f - 2) \tau{(f)}+ (2 - 9 f + 13 f^2 - 6 f^3) \tau'{(f)} = 0

The general solution to this equation (per Mathematica, persumably by pulling all the \tau to one side and integrating) is:

\tau{(f)} = \frac{1 +

2 (1 - f)^2\left( \phi + \log((2 - 3 f)/(1-f))\right)}{4 f - 2}

for any constant \phi.

The boundary condition is \tau{\left(1/2\right)} = 1/2. We may easily verify that this BC is satisfied by \phi=-2, by plugging that in and then taking the limit f \to 1/2.

Our desired limiting time to finish the game is then c \tau{\left(0\right)}. This evaluates to

\lim_{c\to\infty} \frac{T}{c}= \left(\frac{3}{2} - \log{2}\right) \approx 0.80685.

Since the number of cards is twice the number of pairs, this seems to agree with @dreev’s numerical 1.6137-ish