Disclaimer: I’m the founder of Forfeit (very similar to Beeminder) and am now building a company called Overlord. Overlord is an AI agent designed to monitor you 24/7 and encourage you to stick to good habits and avoid bad ones. This essay argues that AI can pretty much fix self-control very soon, by giving everyone an AI accountability partner, but of course I’m biased, as I’m building a company in this space.

For most people, self-control is their #1 issue. Most people are badly messing up at least one area of their life because they can’t control themselves. Thought experiment: if you had a friend following you around 24/7, would you be able to kick all your bad habits? Probably.

Let’s take the issue of obesity (40% of Americans right now). If an obese person had a friend following them around each day, they’d likely be able to eat 230 fewer calories a day (enough to lose about 2 lbs a month). This would be almost comically easy: your friend would just say “do you need large fries, or is a medium OK?”, and everyone would be thin.
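The 230-calorie figure can be sanity-checked with quick arithmetic. This sketch assumes the common rule of thumb that roughly 3,500 kcal of deficit equals about 1 lb of body fat, and a 30-day month; both are assumptions, and in practice the rule tends to overestimate long-term loss because the body adapts.

```python
# Rough energy-balance arithmetic for the "230 fewer calories a day" claim.
KCAL_PER_LB = 3500    # assumption: rule-of-thumb conversion, not an exact constant
DAILY_DEFICIT = 230   # calories skipped per day (from the text)
DAYS_PER_MONTH = 30   # assumption: a 30-day month


def pounds_lost_per_month(daily_deficit: float, days: int = DAYS_PER_MONTH) -> float:
    """Estimate monthly weight loss from a constant daily calorie deficit."""
    return daily_deficit * days / KCAL_PER_LB


print(round(pounds_lost_per_month(DAILY_DEFICIT), 2))  # → 1.97
```

So 230 kcal × 30 days ≈ 6,900 kcal, which is just under 2 lbs under this rule of thumb.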

This applies to almost everything. Think through the following issues yourself; each would be fixed almost immediately if you had a friend following you around and encouraging you: porn addiction, not exercising, doomscrolling, drinking too much…

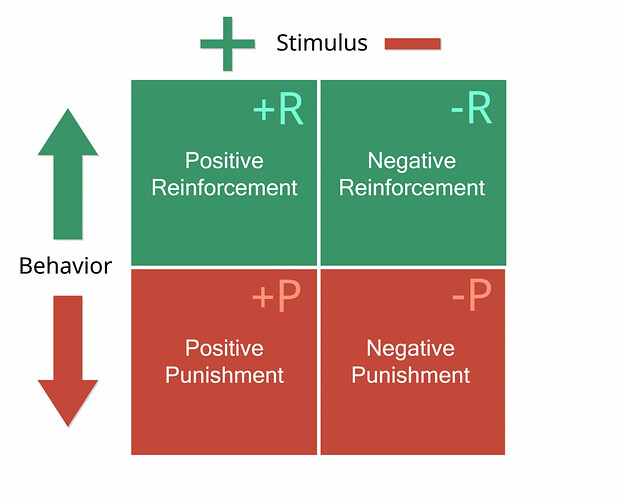

Now, imagine that instead of a friend, it’s an AI. Of course, you don’t (yet) feel the shame towards an AI that you would towards a friend. This means you’d need to jury-rig pain into it: it can charge you money (LessWrong - Losing money or completing habits), call you to persuade you, text your friends, or call your mum. But if it’s done intelligently, striking a balance between being too nice and too mean, it should get pretty close to the friend. And something pretty close to a fix for self-control is the Holy Grail for most people.

How far off are these AIs? They will arrive at the same time as AI personal assistants and, in my opinion, will be more valuable to a lot of people. A 24/7 accountability partner isn’t a job we can copy from humans today (paying a human to follow you around 24/7 is too expensive), so it’s discussed far less than personal assistants, but it will be incredibly valuable. Would you rather have a chatbot that can book hotels and flights for you, or quit smoking?

Real-world example

Now let’s talk about how easy it is to overcome most bad habits (to be clear, I’m not talking about genuine addictions). I have a bad habit of smoking cigarettes. I’m not worried about when I’m drunk, but even when I’m sober, I can’t say no if a friend offers me one. Saying no is incredibly easy, maybe a 1/10 in difficulty in the moment, but for some reason I still don’t do it. Let’s compare that with some deterrence mechanisms:

Texting my mum telling her I smoked: 5/10 (I wouldn’t smoke)

Losing $5: 3/10 (I wouldn’t smoke)

Having to spend 5 minutes sat in silence: 4/10 (I wouldn’t smoke)

As long as the deterrence mechanism scores higher than the pull of the negative action, I wouldn’t do it. And it takes only a shockingly simple deterrence mechanism to counteract a negative action and, therefore, break a lifelong bad habit.
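The rule in the paragraph above (the bad action only wins if the deterrent is no more painful than the habit’s pull) can be written down directly. This is a minimal sketch using the 1–10 scores from the smoking example; the names `HABIT_PULL`, `DETERRENTS` and `would_still_act` are hypothetical, chosen for illustration, and treating a tie as “still smoke” is an assumption that follows from “scores higher than”.

```python
# Hypothetical encoding of the 1-10 "pain" scores from the smoking example.
HABIT_PULL = 1  # how hard it is to say no in the moment (from the text)

DETERRENTS = {
    "text my mum that I smoked": 5,
    "lose $5": 3,
    "sit in silence for 5 minutes": 4,
}


def would_still_act(habit_pull: int, deterrent_pain: int) -> bool:
    """The bad action only wins if the deterrent is NOT more painful
    than the pull of the habit (a tie still counts as acting)."""
    return deterrent_pain <= habit_pull


for name, pain in DETERRENTS.items():
    verdict = "smoke" if would_still_act(HABIT_PULL, pain) else "don't smoke"
    print(f"{name} ({pain}/10): {verdict}")
```

Every deterrent in the list beats a 1/10 pull, which is the point of the example: the deterrent doesn’t need to be large, only larger than the temptation.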

With the deterrence mechanism sorted, how can we monitor people? We ingest all of their personal data (i.e., an AI Overlord) and piece it together from there. We spend about 10 hours a day on screens, so after roughly 8 hours of sleep, that leaves ~6 hours where we don’t know what the user is doing. For those hours, the obvious signals work: location, credit card transactions, and simply asking the person what they’re doing.

Crucially, from this data an intelligent AI can piece things together, just as a human would. If I’m trying to quit drinking, and the AI sees that it’s 9pm on a Friday, I’m at a bar, and I’ve just spent $9 on my card, it can easily suss out that I’m probably drinking. It can recognise patterns and weak points very easily. It doesn’t need to stop you from doing your bad habits every minute of the day, only when you’re weak: each minute gets its own “chance of relapse” score, depending on the time of day, how much sleep you got last night, and, in general, how suspicious the AI is that you’re close to relapsing.
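One way to picture a per-minute “chance of relapse” score is a logistic function over a handful of signals like the ones above (time of day, sleep, location, card transactions). This is a minimal sketch, not how Overlord works: the `Context` fields, the weights, the bias and the 0.5 intervention threshold are all hypothetical, hand-picked for illustration, and a real system would presumably learn them per user.

```python
import math
from dataclasses import dataclass


@dataclass
class Context:
    hour: int               # 0-23, local time
    is_weekend_night: bool  # e.g. Friday or Saturday evening
    hours_slept: float      # last night's sleep
    at_bar: bool            # derived from location data
    bar_purchase: bool      # card transaction at a bar-type merchant


# Hypothetical hand-picked weights; illustrative only.
WEIGHTS = {
    "late": 1.0,
    "weekend_night": 1.0,
    "short_sleep": 0.8,
    "at_bar": 1.5,
    "bar_purchase": 2.0,
}
BIAS = -3.0


def relapse_score(ctx: Context) -> float:
    """Return a 0-1 'chance of relapse' score via a logistic function.
    Booleans count as 0 or 1 when multiplied by a weight."""
    z = BIAS
    z += WEIGHTS["late"] * (ctx.hour >= 20 or ctx.hour < 3)
    z += WEIGHTS["weekend_night"] * ctx.is_weekend_night
    z += WEIGHTS["short_sleep"] * (ctx.hours_slept < 6)
    z += WEIGHTS["at_bar"] * ctx.at_bar
    z += WEIGHTS["bar_purchase"] * ctx.bar_purchase
    return 1 / (1 + math.exp(-z))


def should_intervene(ctx: Context, threshold: float = 0.5) -> bool:
    """Only step in when the score crosses the (hypothetical) threshold."""
    return relapse_score(ctx) >= threshold


friday_bar = Context(hour=21, is_weekend_night=True, hours_slept=7,
                     at_bar=True, bar_purchase=True)
tuesday_morning = Context(hour=10, is_weekend_night=False, hours_slept=8,
                          at_bar=False, bar_purchase=False)
print(round(relapse_score(friday_bar), 3), should_intervene(friday_bar))
print(round(relapse_score(tuesday_morning), 3), should_intervene(tuesday_morning))
```

The design choice mirrors the text: the AI stays quiet most of the time and only intervenes when several weak signals line up, as in the Friday-night bar example.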

AI will create the perfect conditions for addiction

Here’s what’s likely to happen, and why we will need this more than ever.

- Loss of purpose: People will no longer get purpose from their jobs, because AI will do those jobs better than them. Purpose fends off addiction and bad habits very well.

- Economic displacement: Until we figure out UBI, people will lose their jobs and have no money. Poorer people are more likely to become addicts.

- Abundance of time: These jobless, purposeless, broke people will now have 16 hours a day to fill. Abundance of time with nothing to do is a recipe for addiction.

- AI superstimuli: AIs will very soon be the most charismatic, caring, intelligent people you’ve ever spoken to. Most people’s friends would score around 5/10 on attractiveness, intelligence, interestingness, and empathy. If you could FaceTime a person who’s a 10/10 on all of these, would you still find anything else fun?

- Social withdrawal: As a result, people’s friends will start to drop off the map, and with fewer and fewer friends, people will socialise less and less.

In short: we will very soon be purposeless, broke, bored, and lonely, and a superintelligent, superattractive AI will step in. I argue that most of society simply won’t be able to resist, and that we will need an “AI Iron Dome” to protect us from these new superstimuli.

We already have guardrails imposed on us by society: I wouldn’t stand up and shout profanities in a coffee shop, as it’s socially embarrassing. I wouldn’t ignore my boss, as it’s financially painful. There are thousands of things you could do at any moment, but because of these invisible guardrails around us, we only ever seriously consider two or three. Most people wouldn’t even leave the line at a coffee shop, because it’s a little bit weird. These defence AIs would do the same thing: they would let you set your own guardrails on your life, so you never do something future you would regret.

So, we will soon have two very powerful competing AIs: the AI “Superfriends” and the AI “Iron Domes”. The Iron Domes won’t be taking away your agency, just aligning your actions with what you, 24 hours from now, would want you to do. Right now we have 100% control over our actions moment to moment, which is disastrous. We should have 90-95% control over what we are doing, and the other 5-10% should be controlled by an AI aligned with our future, rational self. This seems dystopian now, but we will likely have no choice in the matter.

Practical examples

These are essentially the instructions you might give a friend who had some control over you. Here are some examples:

- “In my own voice, call me at 7am and give me a pep talk. Every minute I’m not awake past 7am, charge me $0.10”

- “For each rep of a posture exercise I do, give me one minute on Instagram”

- “I tend to smoke weed when I go to Jake’s, and want to stop. Make me send a photo of my eyes every time I leave.”

- “Only allow me on my phone when I have no events on my calendar”

- “Make sure I take max three Zyns a day (must send photo of canister each morning)”

- “When I go out, gently push me to get home. When I wake up hungover, intelligently motivate me with financial penalties to leave the house and get to the gym right as I wake up.”

- “I’m getting home now - make sure I lock in on my Mac with 45 min pomodoros with 15 min spacing (must send video of myself just lying down, decompressing) until 6pm”

- More examples that users have shared here (Overlord Community Goals)
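Several of the goals above boil down to simple rules over time, counts and calendar data. The sketch below encodes three of them (the 7am wake-up penalty, reps for Instagram minutes, and “no phone during calendar events”) as plain functions. The function names and the event format are hypothetical, and penalties are kept in integer cents to avoid floating-point rounding; this illustrates the rules, not Overlord’s actual implementation.

```python
from datetime import datetime, time
from typing import List, Tuple

WAKE_DEADLINE = time(7, 0)        # "call me at 7am"
PENALTY_CENTS_PER_MINUTE = 10     # "$0.10 per minute" past 7am
INSTAGRAM_MINUTES_PER_REP = 1     # "one minute on Instagram" per rep


def wake_up_penalty_cents(woke_at: datetime) -> int:
    """Charge 10 cents for every full minute awake past 7am; waking early costs nothing."""
    deadline = datetime.combine(woke_at.date(), WAKE_DEADLINE)
    minutes_late = max(0, int((woke_at - deadline).total_seconds() // 60))
    return minutes_late * PENALTY_CENTS_PER_MINUTE


def instagram_minutes_earned(posture_reps: int) -> int:
    """One minute of Instagram per posture-exercise rep."""
    return max(0, posture_reps) * INSTAGRAM_MINUTES_PER_REP


def phone_allowed(now: datetime, events: List[Tuple[datetime, datetime]]) -> bool:
    """Allow the phone only when no calendar event (start, end) covers `now`."""
    return not any(start <= now < end for start, end in events)


print(wake_up_penalty_cents(datetime(2024, 5, 6, 7, 25)))  # → 250 (i.e. $2.50)
```

Keeping each goal as a small, separate rule makes it easy to mix and match them, much like the free-text goals users write.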